Bumps [deepdiff](https://github.com/seperman/deepdiff) from 6.7.1 to 8.6.1. <details> <summary>Release notes</summary> <p><em>Sourced from <a href="https://github.com/seperman/deepdiff/releases">deepdiff's releases</a>.</em></p> <blockquote> <h2>8.5.0</h2> <ul> <li>Updating deprecated pydantic calls</li> <li>Switching to pyproject.toml</li> <li>Fix for moving nested tables when using iterable_compare_func. by</li> <li>Fix recursion depth limit when hashing numpy.datetime64</li> <li>Moving from legacy setuptools use to pyproject.toml</li> </ul> <h2>8.4.1</h2> <ul> <li>pytz is not required.</li> </ul> <h2>8.4.0</h2> <ul> <li>Adding BaseOperatorPlus base class for custom operators</li> <li>default_timezone can be passed now to set your default timezone to something other than UTC.</li> <li>New summarization algorithm that produces valid json</li> <li>Better type hint support</li> </ul> <h2>8.1.1</h2> <p>Adding Python 3.13 to setup.py</p> <h2>8.1.0</h2> <ul> <li>Removing deprecated lines from setup.py</li> <li>Added <code>prefix</code> option to <code>pretty()</code></li> <li>Fixes hashing of numpy boolean values.</li> <li>Fixes <strong>slots</strong> comparison when the attribute doesn't exist.</li> <li>Relaxing orderly-set reqs</li> <li>Added Python 3.13 support</li> <li>Only lower if clean_key is instance of str <a href="https://redirect.github.com/seperman/deepdiff/issues/504">#504</a></li> <li>Fixes issue where the key deep_distance is not returned when both compared items are equal <a href="https://redirect.github.com/seperman/deepdiff/issues/510">#510</a></li> <li>Fixes exclude_paths fails to work in certain cases</li> <li>exclude_paths fails to work <a href="https://redirect.github.com/seperman/deepdiff/issues/509">#509</a></li> <li>Fixes to_json() method chokes on standard json.dumps() kwargs such as sort_keys</li> <li>to_dict() method chokes on standard json.dumps() kwargs <a href="https://redirect.github.com/seperman/deepdiff/issues/490">#490</a></li> 
<li>Fixes accessing the affected_root_keys property on the diff object returned by DeepDiff fails when one of the dicts is empty</li> <li>Fixes accessing the affected_root_keys property on the diff object returned by DeepDiff fails when one of the dicts is empty <a href="https://redirect.github.com/seperman/deepdiff/issues/508">#508</a></li> </ul> <p>8.0.1 - extra import of numpy is removed</p> <h2>8.0.0</h2> <p>With the introduction of <code>threshold_to_diff_deeper</code>, the values returned are different than in previous versions of DeepDiff. You can still get the older values by setting <code>threshold_to_diff_deeper=0</code>. However to signify that enough has changed in this release that the users need to update the parameters passed to DeepDiff, we will be doing a major version update.</p> <ul> <li><input type="checkbox" checked="" disabled="" /> <code>use_enum_value=True</code> makes it so when diffing enum, we use the enum's value. It makes it so comparing an enum to a string or any other value is not reported as a type change.</li> <li><input type="checkbox" checked="" disabled="" /> <code>threshold_to_diff_deeper=float</code> is a number between 0 and 1. When comparing dictionaries that have a small intersection of keys, we will report the dictionary as a <code>new_value</code> instead of reporting individual keys changed. If you set it to zero, you get the same results as DeepDiff 7.0.1 and earlier, which means this feature is disabled. The new default is 0.33 which means if less that one third of keys between dictionaries intersect, report it as a new object.</li> <li><input type="checkbox" checked="" disabled="" /> Deprecated <code>ordered-set</code> and switched to <code>orderly-set</code>. The <code>ordered-set</code> package was not being maintained anymore and starting Python 3.6, there were better options for sets that ordered. 
I forked one of the new implementations, modified it, and published it as <code>orderly-set</code>.</li> <li><input type="checkbox" checked="" disabled="" /> Added <code>use_log_scale:bool</code> and <code>log_scale_similarity_threshold:float</code>. They can be used to ignore small changes in numbers by comparing their differences in logarithmic space. This is different than ignoring the difference based on significant digits.</li> <li><input type="checkbox" checked="" disabled="" /> json serialization of reversed lists.</li> <li><input type="checkbox" checked="" disabled="" /> Fix for iterable moved items when <code>iterable_compare_func</code> is used.</li> <li><input type="checkbox" checked="" disabled="" /> Pandas and Polars support.</li> </ul> <h2>7.0.1</h2> <ul> <li><input type="checkbox" checked="" disabled="" /> When verbose=2, return <code>new_path</code> when the <code>path</code> and <code>new_path</code> are different (for example when ignore_order=True and the index of items have changed).</li> </ul> <!-- raw HTML omitted --> </blockquote> <p>... (truncated)</p> </details> <details> <summary>Commits</summary> <ul> <li>See full diff in <a href="https://github.com/seperman/deepdiff/commits">compare view</a></li> </ul> </details> <br /> [](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores) Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`. 
Signed-off-by: dependabot[bot] <support@github.com>
Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Guidelines for Test Framework

This document guides you through the Pytest-based PyMilvus test framework.

You can find the test code on GitHub.

Quick Start

Deploy Milvus

To accommodate the variety of requirements, Milvus offers as many as four deployment methods. PyMilvus supports Milvus deployed with any of the methods below:

- Install with Docker Compose

- Install on Kubernetes

- Install with KinD

For test purposes, we recommend installing Milvus with KinD. KinD supports one-click deployment of Milvus and its test client. KinD deployment is tailored for scenarios with a small data scale, such as developing and debugging test cases and functional verification.

- Prerequisites

- Install KinD with script

- Enter the local directory of the code */milvus/tests/scripts/

- Build the KinD environment, and execute CI Regression test cases automatically:

$ ./e2e-k8s.sh

- By default, the KinD environment is cleaned up automatically after the test cases finish. To keep the KinD environment:

$ ./e2e-k8s.sh --skip-cleanup

- Skip the automatic test case execution and keep the KinD environment:

$ ./e2e-k8s.sh --skip-cleanup --skip-test --manual

Note: You need to log in to the test client container to run and debug the test cases manually.

- See more script parameters:

$ ./e2e-k8s.sh --help

- Export cluster logs:

$ kind export logs .

PyMilvus Test Environment Deployment and Case Execution

We recommend using Python 3 (3.8 or higher), consistent with the version supported by PyMilvus.

Note: Procedures listed below will be completed automatically if you deployed Milvus using KinD.

- Install the Python packages required for the tests. Enter */milvus/tests/python_client/ and execute:

$ pip install -r requirements.txt

- The default test log path is /tmp/ci_logs/ under the config directory. You can set an environment variable to change the path before starting the test cases:

$ export CI_LOG_PATH=/tmp/ci_logs/test/

| Log Level | Log File |
|---|---|
| debug | ci_test_log.debug |
| info | ci_test_log.log |
| error | ci_test_log.err |

- You can configure default parameters in pytest.ini under the root path. For instance:

addopts = --host *.*.*.* --html=/tmp/ci_logs/report.html

where host should be set to the IP address of the Milvus service, and *.html is the report generated for the test.

- Enter the testcases directory and run the following command, which is the standard pytest invocation, to execute a test case:

$ python3 -W ignore -m pytest <test_file_name>
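The log path and pytest.ini settings described in the steps above can be sketched in plain Python. This is only an illustration under assumptions: the helper names `resolve_log_files` and `read_addopts` are hypothetical and are not part of the framework, the host `127.0.0.1` is a placeholder, and pytest does its own parsing of `addopts` (the `--html` option is provided by the pytest-html plugin).

```python
import configparser
import os
import shlex

DEFAULT_LOG_DIR = "/tmp/ci_logs/"  # default log path stated in this guide

# Log level -> log file name, taken from the table in this guide.
LOG_FILES = {
    "debug": "ci_test_log.debug",
    "info": "ci_test_log.log",
    "error": "ci_test_log.err",
}


def resolve_log_files(environ=os.environ):
    """Hypothetical helper: map each log level to its full file path."""
    log_dir = environ.get("CI_LOG_PATH", DEFAULT_LOG_DIR)
    return {level: os.path.join(log_dir, name) for level, name in LOG_FILES.items()}


def read_addopts(ini_text):
    """Hypothetical helper: split the addopts line of a pytest.ini into tokens."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    return shlex.split(parser["pytest"]["addopts"])


# Placeholder configuration; replace the host with your Milvus service address.
PYTEST_INI = """
[pytest]
addopts = --host 127.0.0.1 --html=/tmp/ci_logs/report.html
"""

if __name__ == "__main__":
    print(resolve_log_files({"CI_LOG_PATH": "/tmp/ci_logs/test/"})["info"])
    print(read_addopts(PYTEST_INI))
```

Passing the environment as an argument keeps the sketch easy to exercise without changing the real process environment.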

An Introduction to Test Modules

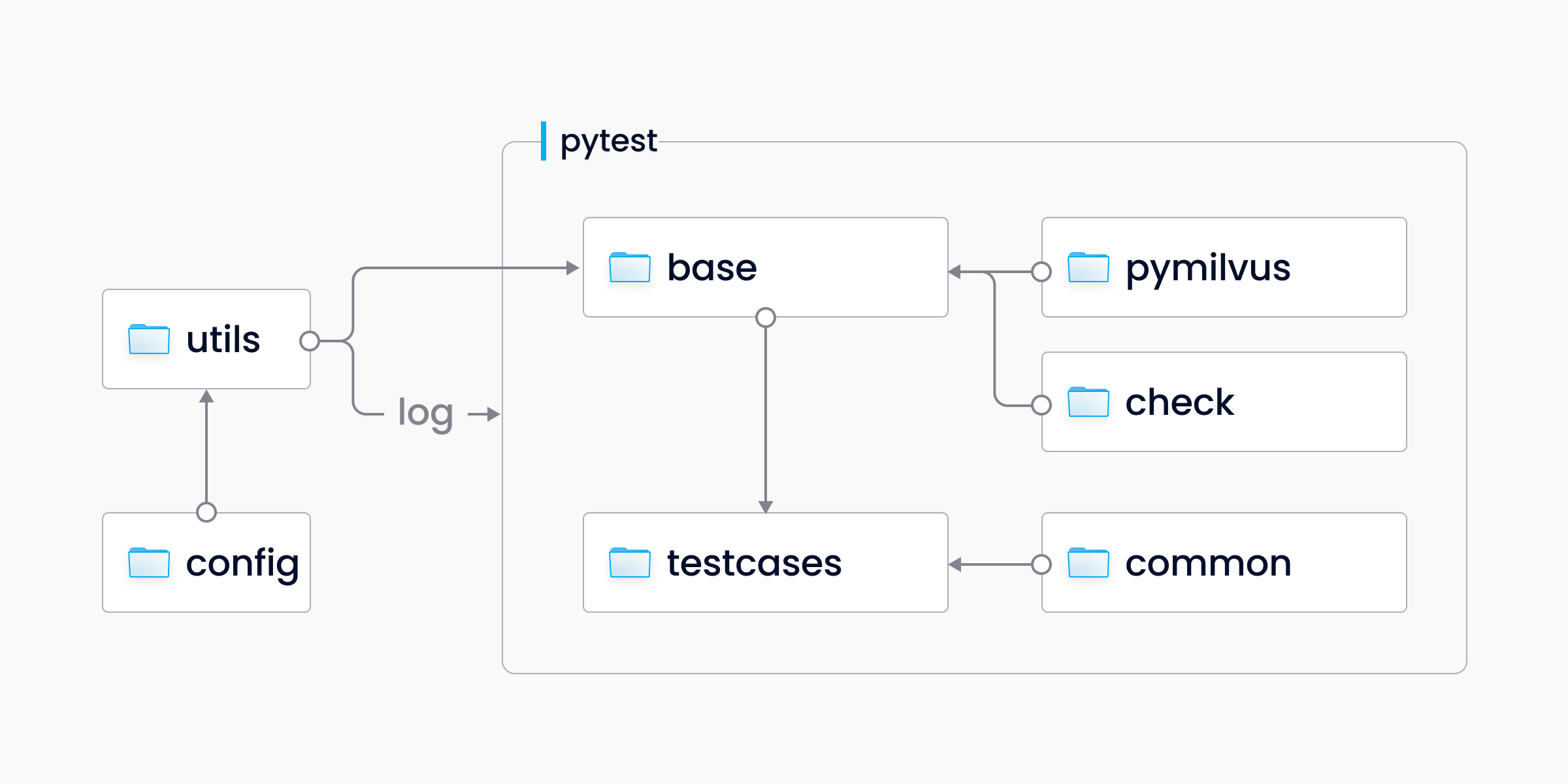

Module Overview

Working directories and files

- base: stores the encapsulated PyMilvus module files, and setup & teardown functions for pytest framework.

- check: stores the check module files for returned results from interface.

- common: stores the files of common methods and parameters for test cases.

- config: stores the basic configuration file.

- testcases: stores test case scripts.

- utils: stores utility programs, such as utility log and environment detection methods.

- requirements.txt: specifies the Python packages required for executing test cases.

- conftest.py: you can write fixture functions or local plugins in this file. These functions and plugins take effect within the current folder and its subfolders.

- pytest.ini: the main configuration file for pytest.

Critical design ideas

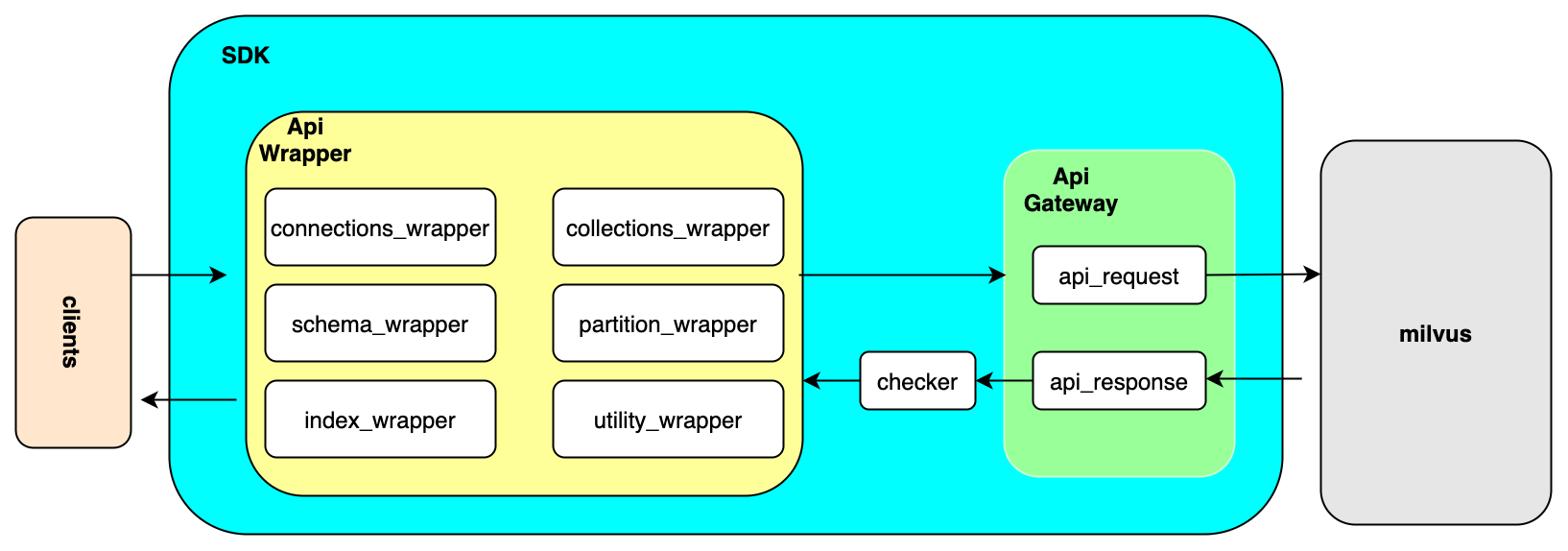

- base/*_wrapper.py encapsulates the tested interface, uniformly processes requests from the interface, abstracts the returned results, and passes the results to check/func_check.py module for checking.

- check/func_check.py encompasses result checking methods for each interface for invocation from test cases.

- base/client_base.py uses pytest framework to process setup/teardown functions correspondingly.

- Test case files in the testcases folder should inherit TestcaseBase from base/client_base.py. Put the common methods and parameters used by test cases into the common module for invocation.

- Add global configurations under config directory, such as log path, etc.

- Add global implementation methods under utils directory, such as utility log module.
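The wrapper and checker flow described in the list above can be sketched as follows. This is a simplified illustration, not the framework's code: `FakePartitionApi`, `call_api`, `ResponseCheckerSketch`, and `PartitionWrapperSketch` are hypothetical names, the string keys in `check_items` stand in for the framework's constants, and the default check (the call must succeed when no `check_task` is given) is an assumption.

```python
class FakePartitionApi:
    """Stand-in for the tested SDK interface (hypothetical, not PyMilvus)."""

    def create(self, collection, name):
        if collection is None:
            raise ValueError("collection is None")
        return {"collection": collection, "name": name}


def call_api(func, *args, **kwargs):
    """Call the tested interface and return [result, succeeded]."""
    try:
        return [func(*args, **kwargs), True]
    except Exception as exc:  # abstract any failure into a uniform result
        return [exc, False]


class ResponseCheckerSketch:
    """Plays the role of check/func_check.py: picks a check by check_task."""

    def __init__(self, response, succeeded, check_task, check_items):
        self.response = response
        self.succeeded = succeeded
        self.check_task = check_task
        self.check_items = check_items or {}

    def run(self):
        if self.check_task is None:
            # Assumed default check: the call itself must have succeeded.
            assert self.succeeded, f"unexpected failure: {self.response!r}"
        elif self.check_task == "err_res":
            assert not self.succeeded, "expected the call to fail"
            assert self.check_items["err_msg"] in str(self.response)
        else:
            raise ValueError(f"unknown check_task: {self.check_task}")
        return True


class PartitionWrapperSketch:
    """Plays the role of a base/*_wrapper.py class."""

    def __init__(self, api):
        self.api = api

    def init_partition(self, collection, name, check_task=None, check_items=None):
        response, succeeded = call_api(self.api.create, collection, name)
        checked = ResponseCheckerSketch(response, succeeded, check_task, check_items).run()
        return response, checked


if __name__ == "__main__":
    wrapper = PartitionWrapperSketch(FakePartitionApi())
    print(wrapper.init_partition("c1", "p1"))
    print(wrapper.init_partition(None, "p1", check_task="err_res",
                                 check_items={"err_msg": "collection is None"}))
```

Keeping the exception handling in one place means a test case only states what it expects and never needs its own try/except.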

Adding code

This section describes what to keep in mind when adding new test cases or framework tools.

Notice and best practices

- Coding style

  - Test files: each SDK class corresponds to a test file. So do the `load` and `search` methods.

  - Test classes: each test file contains two kinds of test classes.

    - `TestObjectParams`:
      - Indicates the parameter test of the corresponding interface. For instance, `TestPartitionParams` represents the parameter test for the Partition interface.
      - Tests the target class/method under different parameter inputs. The parameter test covers `default`, `empty`, `none`, `datatype`, `maxsize`, etc.

    - `TestObjectOperations`:
      - Indicates the function/operation test of the corresponding interface. For instance, `TestPartitionOperations` represents the function/operation test for the Partition interface.
      - Tests the target class/method with legitimate parameter inputs and its interaction with other interfaces.

  - Testcase naming

    - `TestObjectParams`:
      - Name after the parameter input of the test case. For instance, `test_partition_empty_name()` tests the behavior when an empty string is passed as the `name` parameter.

    - `TestObjectOperations`:
      - Name after the operation procedure of the test case. For instance, `test_partition_drop_partition_twice()` tests the behavior when a partition is dropped twice consecutively.
      - Name after assertions. For instance, `test_partition_maximum_partitions()` tests the maximum number of partitions that can be created.
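The naming rules above can be illustrated with a skeleton. This is only a sketch: the test bodies are left empty, and `list_test_names` is a hypothetical helper added here to show the resulting names.

```python
class TestPartitionParams:
    """Parameter tests for the Partition interface."""

    def test_partition_empty_name(self):
        """Named after the parameter input: an empty string as name."""


class TestPartitionOperations:
    """Function/operation tests for the Partition interface."""

    def test_partition_drop_partition_twice(self):
        """Named after the operation procedure: drop a partition twice."""

    def test_partition_maximum_partitions(self):
        """Named after the assertion: the maximum number of partitions."""


def list_test_names(cls):
    """Hypothetical helper: collect the test method names defined on a class."""
    return sorted(name for name in vars(cls) if name.startswith("test_"))


if __name__ == "__main__":
    print(list_test_names(TestPartitionParams))
    print(list_test_names(TestPartitionOperations))
```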

- Notice

- Do not initialize PyMilvus objects in the test case files.

- Generally, do not add log IDs to test case files.

- Directly call the encapsulated methods or attributes in test cases, as shown below:

To create multiple partition objects, call `self.init_partition_wrap()`, which returns the newly created partition objects. Call `self.partition_wrap` instead when you do not need multiple objects.

    # create partition - call the default initialization method
    partition_w = self.init_partition_wrap()
    assert partition_w.is_empty

    # create partition - directly call the encapsulated object
    self.partition_wrap.init_partition(collection=collection_name, name=partition_name)
    assert self.partition_wrap.is_empty

- To test the error or exception returned from an interface:

  - Pass `check_task=CheckTasks.err_res`.
  - Pass the expected error code and message in `check_items`.

    # create partition with collection is None
    self.partition_wrap.init_partition(collection=None, name=partition_name,
                                       check_task=CheckTasks.err_res,
                                       check_items={ct.err_code: 1, ct.err_msg: "'NoneType' object has no attribute"})

- To test the normal value returned from an interface:

  - Pass `check_task=CheckTasks.check_partition_property`. You can build new check methods in `CheckTasks` for invocation in test cases.
  - Pass the expected results in `check_items`.

    # create partition
    partition_w = self.init_partition_wrap(collection_w, partition_name,
                                           check_task=CheckTasks.check_partition_property,
                                           check_items={"name": partition_name, "description": description,
                                                        "is_empty": True, "num_entities": 0})
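A property check of the kind selected by `check_task` above can be sketched as below. The function `check_partition_property_sketch` is a hypothetical stand-in written for this guide, not the framework's implementation, and `SimpleNamespace` only imitates a partition object.

```python
from types import SimpleNamespace


def check_partition_property_sketch(partition, check_items):
    """Compare each expected item with the attribute of the same name."""
    mismatches = {}
    for key, expected in check_items.items():
        actual = getattr(partition, key)
        if actual != expected:
            mismatches[key] = (expected, actual)
    assert not mismatches, f"property mismatch (expected, actual): {mismatches}"
    return True


# Stand-in for a freshly created, empty partition.
partition = SimpleNamespace(name="p1", description="", is_empty=True, num_entities=0)

if __name__ == "__main__":
    print(check_partition_property_sketch(
        partition,
        {"name": "p1", "description": "", "is_empty": True, "num_entities": 0},
    ))
```

Collecting all mismatches before asserting reports every differing property in a single failure message.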

- Adding test cases

  - Find the encapsulated tested interface with the same name in the *_wrapper.py files under the base directory. Each interface returns a list with two values: the result returned by the PyMilvus interface, and the assertion of normal/abnormal results, i.e. `True`/`False`. The returned judgment can be used in the extra result checking of test cases.

  - Add the test cases to the test file in the testcases folder that corresponds to the tested interface. You can refer to the test files under this directory to create your own test cases, as shown below:

    @pytest.mark.tags(CaseLabel.L1)
    @pytest.mark.parametrize("partition_name", [cf.gen_unique_str(prefix)])
    def test_partition_dropped_collection(self, partition_name):
        """
        target: verify create partition against a dropped collection
        method: 1. create collection1
                2. drop collection1
                3. create partition in collection1
        expected: raise exception
        """
        # create collection
        collection_w = self.init_collection_wrap()

        # drop collection
        collection_w.drop()

        # create partition failed
        self.partition_wrap.init_partition(collection_w.collection, partition_name,
                                           check_task=CheckTasks.err_res,
                                           check_items={ct.err_code: 4, ct.err_msg: "collection not found"})

- Tips

  - Case comments consist of three parts: object, test method, and expected result. You should specify each part.

  - Initialize the tested class in the setup method of the Base class in the base/client_base.py file, as shown below:

    self.connection_wrap = ApiConnectionsWrapper()
    self.utility_wrap = ApiUtilityWrapper()
    self.collection_wrap = ApiCollectionWrapper()
    self.partition_wrap = ApiPartitionWrapper()
    self.index_wrap = ApiIndexWrapper()
    self.collection_schema_wrap = ApiCollectionSchemaWrapper()
    self.field_schema_wrap = ApiFieldSchemaWrapper()

  - Pass the parameters to the corresponding encapsulated method when calling the interface you need to test. As shown below, all parameters align with those in the PyMilvus interfaces except for `check_task` and `check_items`.

    def init_partition(self, collection, name, description="", check_task=None, check_items=None, **kwargs)

  - `check_task` selects the corresponding interface check method in the ResponseChecker class in the check/func_check.py file. You can choose methods under the `CheckTasks` class in the common/common_type.py file.

  - The specific content of `check_items` passed to the check method is determined by the check method selected through `check_task`.

  - The tested interface can return normal results when `CheckTasks` and `check_items` are not passed.

- Adding framework functions

  - Add global methods or tools under the utils directory.

  - Add corresponding configurations under the config directory.